How Netflix, PayPal, and other big tech companies scale their API development

Learn more about the technology allowing tech companies to scale their APIs while additionally increasing their development speed

Nearly all software products need an API at some point.

You either need your frontend to talk to your backend (that’s basically most products out there), or you want other people’s frontends or systems to talk to your product (Stripe is a prime example of such a product).

Creating APIs, however, can turn into both an art form and an organizational nightmare.

Here is why:

Good APIs need to be well-crafted. You can’t just create some REST endpoints without much design and call it a day. You need to put some real effort into them to make them usable and scalable.

Dedicated API teams usually create a middleware-layer between frontends and backends, which basically adds a whole additional iteration to your feature cycle. Instead of two iterations (first the backend, then the frontend), you now need three iterations until a feature of your product can make an impact for customers.

Companies like Netflix, PayPal, and many more, however, seem to have finally found a way to decrease the organizational burden of creating their APIs.

It’s the same technology I work with daily, nearly at the same scale as these companies, and in this issue, we are going to take a look at what exactly these companies do, and what we can learn from them. But first, we will take a look at common challenges when creating APIs before we dive into how you can solve most of them.

Setting the foundation

Before diving deeper, we should first establish a shared understanding. In this case, you need to know which type of API we are talking about here, and which ones we aren’t.

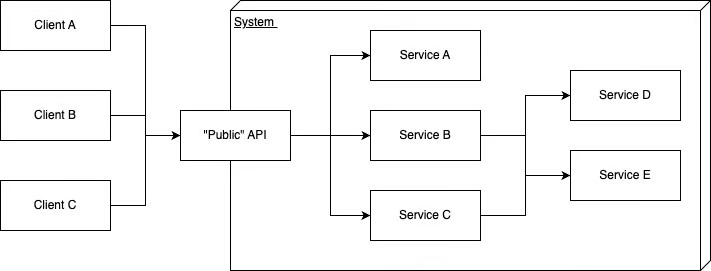

In this particular case, we talk about “THE” API between frontends and backends, so basically the entry point to any system.

The backend itself usually contains many more APIs on a micro-service-level, with many different protocols used, and a lot of inter-service-communication.

To get a better idea of what we talk about, take a look at the following image:

It’s exactly as stated. A more or less public API at the front of the system that connects backend and clients, and in this issue, we will focus on exactly this public-facing API.

Stated as simply as possible: We talk about the public-facing remote API of a system.

The issues with APIs

Creating APIs comes at a cost. To be more precise, there are several issues you face and hurdles you have to clear before you get a good API up and running.

All companies building large-scale systems face these issues at some point, and we will now take a look at what these are.

1. Designing APIs is difficult

There are so many things you need to think about when you want to create a really good API that it’s mind-blowing. You need to think about usability, scalability, security, and maintainability, to name only a few. And even when you are done thinking about these, you still need to implement your API, which comes with its own problems once again.

Stripe is a great example of a company that puts a lot of work into its API (which is no wonder, because this API is Stripe’s product). Their API just “feels good” and is easy to use, precisely because Stripe’s engineers invest so much effort in designing it.

Stripe’s engineers go through many feedback loops until a new API endpoint finally gets released into the wild. This means that changes aren’t easy or fast to make. Instead, slow and careful is king in this particular scenario. That approach isn’t for every company out there, though. Some want to iterate fast (or even faster), ideally without compromising on API quality.

2. Creating APIs is even more difficult

When creating an API, you usually also face organizational issues. Who designs the API? Who implements it? Who runs it? Who maintains it? (You get the point)

In many cases and for many companies, the public-facing API is created by a dedicated team, which creates a new layer of responsibility, and a few more issues.

The API team gets its requirements either from the business-side (product managers, e.g.) or client teams (who want to implement features in their clients, which they need new or additional data for). It basically looks like shown in the following image:

Every new feature you want to introduce needs to run through these three layers, usually in the following order:

The backend needs to add new data or new endpoints

The API team needs to incorporate the new data or endpoints into the public-facing API

The client teams need to use that data to create their features

You could now argue that all teams can still work on a feature concurrently, but there is still some delay between getting a basic idea of how to implement something and providing mock data and/or mock endpoints for other teams to use. Nevertheless, the feature is only finished when all teams are finished.

On the other hand (and to be fair), having a dedicated API team also has one crucial advantage: You have exactly one team that needs to know how to create great public-facing APIs. That’s a fact you also should not underestimate.

3. Increased mobile usage creates even more requirements for APIs

Nowadays, the world is mobile, and mobile usage is only going to increase in the future. Everyone I know has some form of smartphone and uses it regularly. Chances are thus high that most users of any product are going to use it from a mobile device sooner or later.

We don’t have mobile-first web design for no reason. Love it or hate it, but you will most probably have to deal with mobile users if you don’t want to exclude quite a few potential customers from your product. Most products even have a mobile app for exactly that reason (because people somehow like “native” apps more than mobile websites).

Mobile websites and apps have an issue, though: They often need data from your backend, and mobile coverage isn’t always the best. Additionally, not all countries have massive amounts of data for a reasonable price, which puts additional constraints on your design. You can’t let your users fetch megabytes of data only to display a small portion of it. This over-fetching is a common issue of REST APIs.
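To make over-fetching a little more tangible, here is a minimal Python sketch. The blog post payload, its field names, and the 5,000-character body are made up for illustration. The point is only the gap between what a typical REST endpoint returns and what a small mobile list view actually displays.

```python
import json
from typing import Any, Dict

# Hypothetical REST response for a single blog post: every field is always sent.
full_post: Dict[str, Any] = {
    "id": "42",
    "title": "Scaling APIs",
    "description": "How large companies evolve their APIs",
    "author": "Oliver",
    "publishedAt": "2024-01-01T00:00:00Z",
    "lastUpdated": None,
    "body": "x" * 5000,  # stands in for a long article body
}

# The (assumed) mobile list view only shows these two fields.
needed_fields = ("id", "title")


def payload_size(data: Dict[str, Any]) -> int:
    """Return the size of the JSON-encoded payload in bytes."""
    return len(json.dumps(data).encode("utf-8"))


needed_only = {field: full_post[field] for field in needed_fields}

full_size = payload_size(full_post)
needed_size = payload_size(needed_only)
print(f"full response: {full_size} bytes, actually needed: {needed_size} bytes")
```

Everything beyond the second number is data the user pays for but never sees.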

Lastly, the times are over where you could get away with only a web client and one mobile client for iOS and Android each. Depending on what you build, you could even need somewhere around twenty or more different clients.

Believe it or not, Netflix, for example, really has to deal with twenty or more different clients. As a streaming service, they not only live on the web and on mobile devices, but also need to provide content on Smart TVs (which are especially fragmented, because every manufacturer has its own OS with different constraints), Google Chromecast, Amazon Fire TV Stick, Android TV, and more. (I know what I’m talking about because I also work for a pretty large streaming service provider.)

It’s difficult to build a single API that serves so many clients. Clients that all potentially have slightly different requirements of data or ways to get that data. A general-purpose API quickly becomes too inflexible.

If general-purpose APIs don’t do the job, what then?

To serve so many different clients with slightly differing requirements, you would usually have to build the ultimate general-purpose API. But that’s nearly impossible.

Your API would probably consist of many different routes/endpoints and often duplicate logic, only changed slightly, so all clients get the data and functionalities they need. This quickly gets out of hand.

That problem isn’t new, though: Sam Newman already wrote about the so-called Backend-for-Frontend (BFF) pattern in 2015. (To be honest, this pattern had already been applied prior to Newman’s article, but he was among the first to write about it publicly.)

The general idea goes as follows:

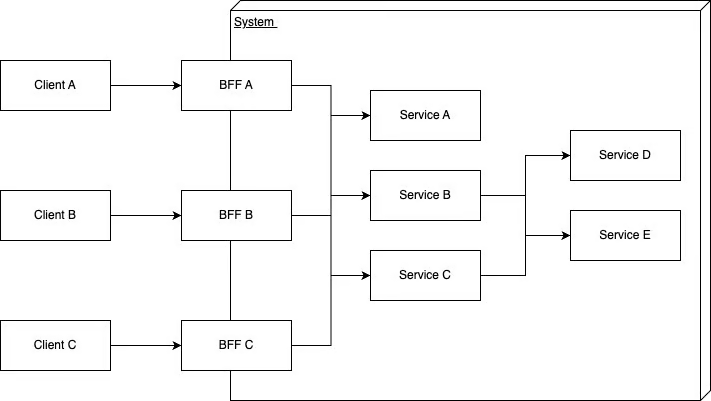

Every client with its special needs gets its own API, called Backend-for-Frontend, which basically looks like this:

If your iOS app wants to fetch data differently than your web app, you only need to add a special endpoint in the iOS BFF. The requirements of your iOS app don’t affect your web app because it’s a totally different API that needs to be adjusted.

This solves one of the many issues: Delivering exactly the data a client needs because all APIs are tailored to the needs of that specific client.

Instead of creating different endpoints for specific clients in a general-purpose API, you assign dedicated teams to each BFF (or even let full-stack engineers build both the client and their own API). This increases the flexibility of your API(s) and additionally eliminates (or at least lessens the impact of) the bottleneck a single API potentially creates.

(The pattern works well, as SoundCloud, for example, has successfully proven in the past.)
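As a rough sketch of the idea (and not how any particular company implements it), the following Python snippet models two BFFs on top of the same backend call. All function names, fields, and data are hypothetical.

```python
from typing import Any, Dict


def fetch_post_from_backend(post_id: str) -> Dict[str, Any]:
    """Hypothetical backend call; a real BFF would call one or more services here."""
    return {
        "id": post_id,
        "title": "Scaling APIs",
        "description": "How large companies evolve their APIs",
        "author": "Oliver",
        "comments": ["Great post!", "Thanks for sharing."],
    }


def ios_bff_get_post(post_id: str) -> Dict[str, Any]:
    """iOS BFF: the app only needs a compact summary and a comment count."""
    post = fetch_post_from_backend(post_id)
    return {
        "id": post["id"],
        "title": post["title"],
        "commentCount": len(post["comments"]),
    }


def web_bff_get_post(post_id: str) -> Dict[str, Any]:
    """Web BFF: the page has room for the full post, including all comments."""
    return fetch_post_from_backend(post_id)


ios_view = ios_bff_get_post("42")
web_view = web_bff_get_post("42")
print(ios_view)
print(web_view["comments"])
```

Each BFF can change its response shape without touching the other one. The price is that every BFF repeats the fetching and shaping logic in its own way.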

Around that time, other companies also struggled with different clients and varying requirements for their APIs. To tackle the problems of traditional REST APIs in mobile apps, Lee Byron, Dan Schafer, and Nick Schrock created GraphQL internally at Facebook in 2012 (they got the idea while working on a redesign of the iOS app). In 2015, Facebook open-sourced GraphQL, and since then, GraphQL has seen massive adoption throughout the industry.

GraphQL solves an issue Backends-for-Frontends create: Instead of having to provide multiple APIs for different clients, you can shrink your API layer down to one again. At the same time, each client can fetch exactly the data it needs. So you basically kill two birds with one stone. Not bad, is it? It doesn’t matter whether your GraphQL entities have 50 or more fields. Clients only fetch what they really need.
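The core idea, clients naming the fields they want, can be sketched in a few lines of Python. This is not a GraphQL implementation: the nested dictionary merely stands in for a query’s selection set, and `select_fields` as well as the sample data are assumptions made for illustration.

```python
from typing import Any, Dict


def select_fields(entity: Dict[str, Any], selection: Dict[str, Any]) -> Dict[str, Any]:
    """Return only the requested fields, descending into nested objects and lists.

    A selection maps a field name to None (plain field) or to a nested selection.
    """
    result: Dict[str, Any] = {}
    for field, sub_selection in selection.items():
        value = entity[field]
        if sub_selection is None:
            result[field] = value
        elif isinstance(value, list):
            result[field] = [select_fields(item, sub_selection) for item in value]
        else:
            result[field] = select_fields(value, sub_selection)
    return result


blog_post = {
    "id": "42",
    "title": "Scaling APIs",
    "description": "How large companies evolve their APIs",
    "author": "Oliver",
    "comments": [
        {"id": "c1", "author": "Ada", "text": "Great post!"},
        {"id": "c2", "author": "Linus", "text": "Thanks for sharing."},
    ],
}

# Roughly what a mobile client would express as: { title comments { text } }
mobile_selection = {"title": None, "comments": {"text": None}}
mobile_result = select_fields(blog_post, mobile_selection)
print(mobile_result)
```

A web client could pass a larger selection to the very same function, which is the reason a single API can serve clients with different needs.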

But GraphQL brings back some of the costs of a general-purpose API: You are once again down to one team (or, less commonly, two or more teams) working on that single API. Chances are high that the resources you allocate to your GraphQL API are still not enough to serve the needs of all stakeholders at the same time. You have reintroduced a bottleneck.

The architectural pattern allowing Netflix, PayPal and other big tech companies to evolve their APIs into something far better

If a general-purpose API is too inflexible and creates a feature bottleneck, BFFs take too much work, and GraphQL itself reintroduces the feature bottleneck, what then?

Time to finally take a look at how big tech companies evolve their APIs and solve most of the problems that the solutions we have looked at so far either create or cannot eliminate.

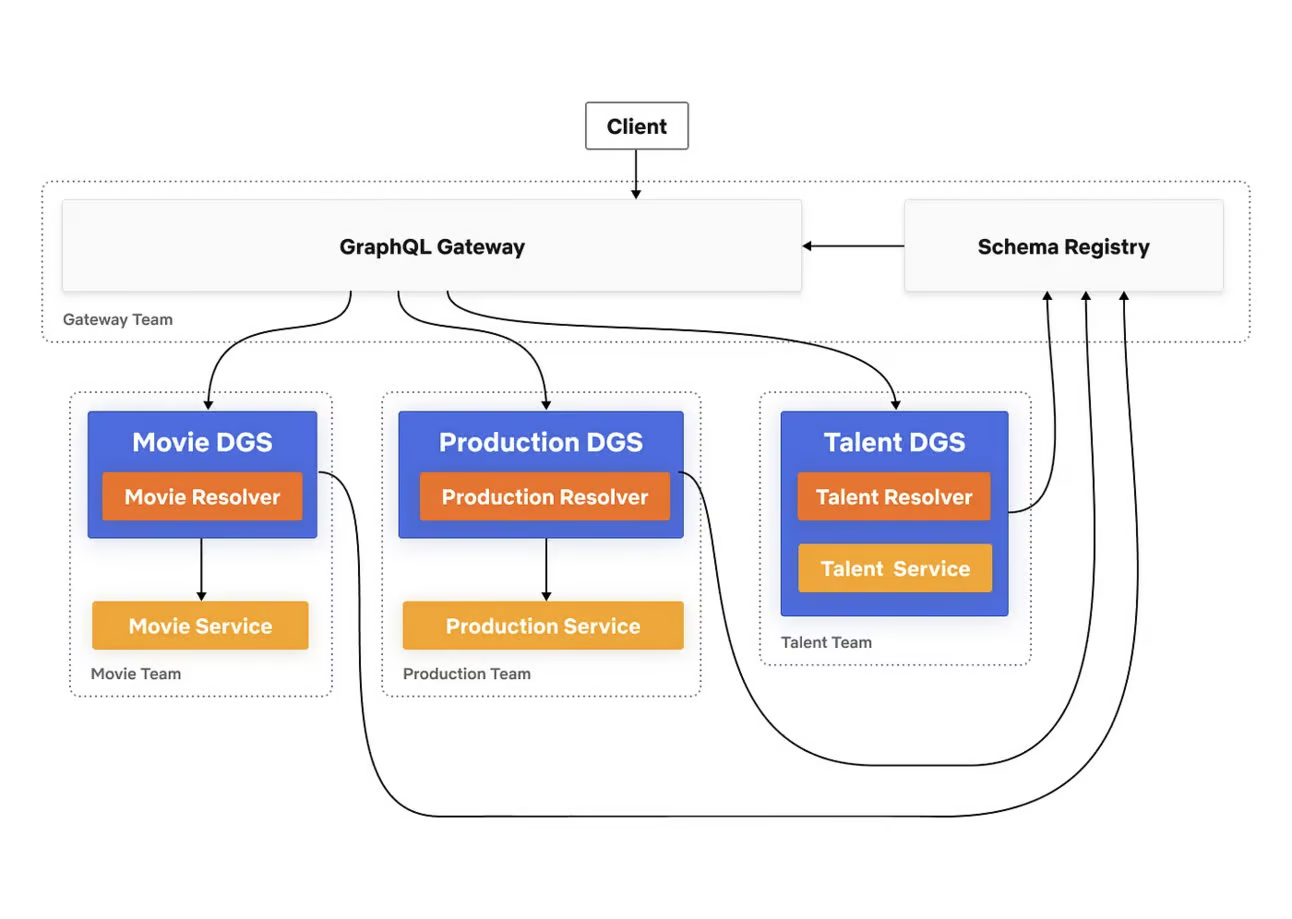

The answer is an architectural model and protocol addition to GraphQL itself, called GraphQL Federation.

GraphQL Federation is also known by the name Apollo Federation, which includes the name of the company that develops the architectural model (partially together with Netflix) and offers commercial and open-source software based on it, Apollo GraphQL.

GraphQL Federation brings something to the table that completely eliminates the need for a middleman: It allows engineers to directly attach backend services to the API, automatically, without the need for a team that does the grunt work of connecting clients and backends.

Instead of creating micro-services that provide RESTful APIs or leverage gRPC, Thrift, or whatever else seems fitting, teams can directly implement their APIs using GraphQL. All changes to their own APIs are reflected in the public API as soon as that team updates its public schema. This completely eliminates the middleman.

Conceptually, Federation consists of three parts:

Well-designed additions to the GraphQL spec in the form of specific directives (directives are like annotations in Java or decorators in TypeScript).

A schema registry to which the teams of individual services upload their GraphQL schemas, and from which clients, as well as a central component, can fetch a single aggregated schema.

A central component, called Router, that dismantles queries, creates a query plan, and calls multiple backend services (called subgraphs) under the hood. All individual responses by subgraphs are then reassembled into a single response.

But let’s take a closer look at what these are and how they work.

1. Federation-Specific Directives

Conceptually, nothing changes when working with Apollo Federation. A graph always consists of a well-defined set of types, queries, mutations, and subscriptions (support for the latter was only recently added to Federation).

A type is like an entity, some form of data your graph offers. It consists of simple fields or sub queries, which are like remote methods that any client can call.

This is an example of a simple entity that models a blog post:

    # DateTime is not a built-in GraphQL scalar, so the schema has to declare it.
    scalar DateTime

    type BlogPost {
      id: ID!
      title: String!
      description: String
      author: String!
      publishedAt: DateTime!
      lastUpdated: DateTime
    }

And this is an example of a corresponding query:

    type Query {
      # fetches a single blog post by its id (the id is required)
      blogPost(id: ID!): BlogPost
    }

In a federated graph, entities can be referenced across subgraphs. In the above case, a blog post could be provided by a blog post service. Blog posts usually have comments, and these might be handled by a completely different team, with their own micro-service and their own data storage.

In this case, the schema for a comment could look like this:

    type Comment {
      id: ID!
      author: String!
      text: String!
    }

In a “classical” GraphQL API, the API service would implement the schema for all types and fetch the corresponding data from different services if certain fields are requested. The comment schema above would move into the original schema and be provided by the one single API service. In a federated API, however, things are a little different, and this is where federation-specific directives come into play.

Each subgraph (every service attached to the federated graph with its own schema) can mark certain entities as “accessible by other graphs”. It does so by adding the key directive to every type it wants to make accessible by others.

In the scenario from above, the comment schema would look as follows:

    type Comment @key(fields: "id") {
      id: ID!
      author: String!
      text: String!
    }

The only addition is the key directive next to the name of the type. It just makes one statement: “You can uniquely identify this type by one field, namely id.” This makes the Comment type a federated entity.

If the blog post service now wants to include a Comment in its schema, it can do so by simply referencing it as follows:

    type BlogPost {
      id: ID!
      title: String!
      description: String
      author: String!
      publishedAt: DateTime!
      lastUpdated: DateTime
      comments: [Comment!]
    }

That is all. The BlogPost itself needs no key directive as long as no other subgraph has to include it in its types, and it can simply reference the Comment type within its own schema. (In practice, the blog post subgraph also declares a minimal stub of Comment containing just its key fields, so that its schema stays valid on its own.) The only thing the providing service of a federated entity needs to do is implement a special method that allows the federation infrastructure to fetch one or multiple entities by the fields specified in their key directive. Everything else is handled by the rest of the federated GraphQL infrastructure.

This also eliminates a primary need of inter-service communication in a system. The communication is implicitly done by the Router in the front. Services themselves don’t necessarily need to talk to each other if they are connected through a federated graph. If a client fetches multiple entities that are connected through the schema, the Router in front of the system will issue the corresponding requests to fetch all necessary data from multiple services.

Theoretically, other subgraphs can also extend existing entities with single or multiple fields, without providing new types themselves, and there are also ways to make certain fields resolvable by multiple subgraphs, or override them. These are lesser-used directives, however, so looking at the key directive specifically shall suffice to give you a general idea.
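The “special method” a providing service has to implement can be sketched as follows. In Apollo Federation, the Router sends a list of so-called representations (the type name plus the key fields) and expects the matching entities back. The Python below is a simplified stand-in for that mechanism: the in-memory `COMMENTS` store and all function names are assumptions, and real subgraph libraries do most of this wiring for you.

```python
from typing import Any, Callable, Dict, List, Optional

# In-memory stand-in for the comment service's data store (hypothetical data).
COMMENTS: Dict[str, Dict[str, Any]] = {
    "c1": {"id": "c1", "author": "Ada", "text": "Great post!"},
    "c2": {"id": "c2", "author": "Linus", "text": "Thanks for sharing."},
}


def resolve_comment_reference(representation: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Look up one Comment by the field named in its key directive ("id")."""
    return COMMENTS.get(representation["id"])


# Maps each entity type name to the function that resolves references to it.
REFERENCE_RESOLVERS: Dict[str, Callable[[Dict[str, Any]], Optional[Dict[str, Any]]]] = {
    "Comment": resolve_comment_reference,
}


def resolve_entities(representations: List[Dict[str, Any]]) -> List[Optional[Dict[str, Any]]]:
    """Resolve a batch of representations, keeping the order of the request."""
    results = []
    for representation in representations:
        resolver = REFERENCE_RESOLVERS[representation["__typename"]]
        results.append(resolver(representation))
    return results


resolved = resolve_entities([
    {"__typename": "Comment", "id": "c2"},
    {"__typename": "Comment", "id": "does-not-exist"},
])
print(resolved)
```

Unknown keys resolve to `None` in this sketch. How a real service reports missing entities is a design decision of that service.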

2. Schema Registry

The schema registry is a central part of a system that leverages a federated graph.

Every subgraph (remember, a micro-service that offers its own partial GraphQL endpoint) uploads its schema to the registry. The registry then takes all available schemas and wires together two new schemas:

An API schema

A supergraph schema

The API schema is the public schema that all clients use to communicate with the GraphQL API itself. This schema doesn’t contain federated directives and only provides what is really necessary for clients to generate types and clients from. It contains all types, interfaces, queries, mutations, and so on from all subgraphs.

The supergraph schema is a special schema that has the same contents as the API schema, but additionally contains federation directives and special control directives, which the Router uses to create query plans. This schema is usually not public.
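To illustrate the relationship between the two schemas, here is a deliberately naive Python sketch. Real composition does far more (it validates the subgraphs against each other and adds routing metadata for the Router), so treat `compose_supergraph` and `to_api_schema` as hypothetical helpers that only show the idea: the supergraph keeps the federation directives, the API schema drops them.

```python
import re
from typing import Dict

# Hypothetical subgraph schemas as they might be uploaded to a registry.
SUBGRAPH_SCHEMAS: Dict[str, str] = {
    "comments": """type Comment @key(fields: "id") {
  id: ID!
  author: String!
  text: String!
}""",
    "blog": """type BlogPost {
  id: ID!
  title: String!
  comments: [Comment!]
}""",
}

# Matches a few federation directives, with or without arguments.
FEDERATION_DIRECTIVE = re.compile(
    r"[ \t]*@(?:key|shareable|external|override)\b(?:\([^)]*\))?"
)


def compose_supergraph(schemas: Dict[str, str]) -> str:
    """Naively concatenate all subgraph schemas, annotated with their origin."""
    parts = [f"# subgraph: {name}\n{sdl}" for name, sdl in sorted(schemas.items())]
    return "\n\n".join(parts)


def to_api_schema(supergraph: str) -> str:
    """Derive the client-facing schema: no directives, no origin annotations."""
    without_directives = FEDERATION_DIRECTIVE.sub("", supergraph)
    lines = [
        line for line in without_directives.splitlines()
        if not line.startswith("# subgraph:")
    ]
    return "\n".join(lines)


supergraph_schema = compose_supergraph(SUBGRAPH_SCHEMAS)
api_schema = to_api_schema(supergraph_schema)
print(api_schema)
```

The Router would work with `supergraph_schema`, because it needs to know which subgraph owns which type, while clients only ever see `api_schema`.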

3. Router

The Router is the entry point to a federated graph. All clients send their GraphQL requests to this component, which then uses the supergraph schema to create a dedicated query plan.

First, a large query is split into multiple different queries (if necessary). Then the resulting subqueries are sent to the respective subgraphs. After that, all individual responses are stitched back together into a single, unified response, which is then sent back to the client.
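The three steps above (split, fetch, stitch) can be sketched in Python. The two subgraphs are plain functions here, the plan is hard-coded instead of being derived from the supergraph schema, and all names and data are hypothetical. A real Router builds the plan automatically and talks to the subgraphs over the network.

```python
from typing import Any, Dict, List

# Hypothetical data owned by the comment service.
COMMENT_STORE: Dict[str, Dict[str, Any]] = {
    "c1": {"id": "c1", "author": "Ada", "text": "Great post!"},
    "c2": {"id": "c2", "author": "Linus", "text": "Thanks for sharing."},
}


def blog_subgraph(post_id: str) -> Dict[str, Any]:
    """Blog post service: knows the post, but only the keys of its comments."""
    return {
        "id": post_id,
        "title": "Scaling APIs",
        "comments": [
            {"__typename": "Comment", "id": "c1"},
            {"__typename": "Comment", "id": "c2"},
        ],
    }


def comment_subgraph(representations: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Comment service: resolves Comment entities by their key field."""
    return [COMMENT_STORE[rep["id"]] for rep in representations]


def execute_blog_post_query(post_id: str) -> Dict[str, Any]:
    """Run a hard-coded plan for: { blogPost(id: ...) { title comments { text } } }"""
    # Step 1: send the part of the query the blog post subgraph can answer.
    post = blog_subgraph(post_id)
    # Step 2: fetch the referenced comments from the subgraph that owns them.
    comments = comment_subgraph(post["comments"])
    # Step 3: stitch both responses into the shape the client asked for.
    return {
        "blogPost": {
            "title": post["title"],
            "comments": [{"text": comment["text"]} for comment in comments],
        }
    }


response = execute_blog_post_query("42")
print(response)
```

Note that the two services never call each other. The only component that knows about both is the function playing the Router.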

The Router also takes care of handling errors, which inevitably happen in distributed systems. Sometimes, errors from subqueries can be included in the final response alongside the data that could be fetched. And sometimes, if the schema doesn’t allow for it (non-null values missing due to errors, for example), the whole request has to result in an error.
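Both outcomes can be sketched in Python. The sketch is simplified to top-level fields only: in GraphQL, a failed non-null field nulls out its nearest nullable parent, and for a top-level field that parent is the whole `data` object. `assemble_response` and `failing_fetch` are hypothetical names, not part of any Router.

```python
from typing import Any, Callable, Dict, List, Tuple

# (field name, fetch function, whether the schema allows null for this field)
FieldFetch = Tuple[str, Callable[[], Any], bool]


def assemble_response(fields: List[FieldFetch]) -> Dict[str, Any]:
    """Collect field results and decide between a partial and a failed response."""
    data: Dict[str, Any] = {}
    errors: List[Dict[str, Any]] = []
    for name, fetch, nullable in fields:
        try:
            data[name] = fetch()
        except Exception as exc:
            errors.append({"message": str(exc), "path": [name]})
            if not nullable:
                # A required field is missing: no usable data can be returned.
                return {"data": None, "errors": errors}
            data[name] = None  # nullable field: keep going with a partial result
    response: Dict[str, Any] = {"data": data}
    if errors:
        response["errors"] = errors
    return response


def failing_fetch() -> Any:
    """Simulates a subgraph that is currently unavailable."""
    raise RuntimeError("comment service unavailable")


# Nullable field fails: the client still gets the title plus an error entry.
partial = assemble_response([
    ("title", lambda: "Scaling APIs", False),
    ("comments", failing_fetch, True),
])

# Non-null field fails: the whole response carries no data.
failed = assemble_response([("title", failing_fetch, False)])

print(partial)
print(failed)
```

This is also why it pays off to think carefully about which fields of a federated schema are declared non-null.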

Architecturally, you can imagine the Router being placed in a system as follows:

How Federated GraphQL helps Netflix, PayPal, and many more with creating APIs at scale

Now that you have a general idea of how GraphQL/Apollo Federation works, it’s time to take a look at what exactly it offers that makes it such a strong alternative to other solutions that big tech companies go all-in on it.

First of all, it’s still GraphQL. GraphQL’s huge advantage over REST is that any client can exactly specify and fetch which data it wants and really needs. Backend or API teams don’t need to deal with any of this. They can simply provide data they have available and even extend their types on-the-fly without breaking anything, decreasing the performance, or even increasing the network overhead. No more over-fetching.

Second, it eliminates the bottleneck a centrally maintained API creates. Features don’t need to be worked on by additional API teams. As soon as a backend service or multiple services have implemented a new feature, clients can begin fetching the new data or using the new remote procedures. Changes and fixes can be reflected way faster. This is a massive speed increase.

Third, it’s still GraphQL (yes, again), and this comes with human-readable schemas that are way faster to scan and understand than OpenAPI specs for RESTful APIs. That’s a huge advantage of GraphQL schemas. They were designed with humans in mind.

Fourth, by spreading the API all over the backend, every engineer working on a backend service integrated into the federated API also becomes an API engineer. It may not sound like much, but frontend and backend engineers have always had very differing views about usable APIs. More often than not, backend engineers simply expose their database schema as their API schema and call it a day. In 99% of all cases, this results in a very poorly usable API. Frontend teams often imagine different, more usable APIs, with better user/developer experience. In a federated GraphQL API, backend engineers can be held responsible and can’t hide behind the fact that someone still has to build a facade (the public-facing API) and call their API under the hood.

Now that you know what GraphQL Federation offers, it’s time to take a look at some case studies to understand how two selected companies use GraphQL at scale, with hundreds or thousands of services and billions of requests a day.

The story of Netflix and GraphQL Federation

As a GraphQL Federation user (and contributor) from the very beginning, Netflix adopted GraphQL very early. Interestingly, though, Netflix began using GraphQL Federation in a not-so-public system first: its Studio API.

Netflix has been producing original content for quite a while, and a large portion of Netflix’s staff works on these original productions. A large group of Netflix engineers also works on the systems that help staff manage these productions, from the very first creative pitch to post-production and the final release of a movie or series.

All these workflows are handled by the Studio API, which allows custom clients to be built on top of it. Thanks to the flexibility of GraphQL, these clients range from mobile clients for creative scouts, to web or desktop apps for Netflix Studio employees doing their work in the office.

The Studio Graph consists of at least three services or “domain graph services” (as Netflix calls them), which all model a partial but important part of the overall domain.

Next to its Studio API, Netflix also started working on migrating its mobile clients from its API framework Falcor over to Federated GraphQL in 2022.

Prior to this, the API was maintained by a single team that used the Java version of Falcor to implement the mobile API. But this created exactly the issues you have already read about. The API team always had to manage communication between both involved parties (frontend and backend), and additionally had to catch up on domain knowledge to understand the needs and desires of its stakeholders.

After realizing the potential gains of GraphQL over their custom implementation Falcor and the added productivity gained by directly connecting backend to frontend engineers, Netflix undertook a very interesting migration (which is a story too long to cover here, but feel free to read about it, it’s worth it) with zero downtime and a lot of A/B Testing, Replay Testing and Sticky Canaries.

Since June 2023, all of Netflix’s mobile traffic has been served through a single Federated GraphQL API, with globally distributed instances of Netflix’s custom Router implementation. We don’t know exactly how many subgraphs the whole graph contains, but we can safely assume that it’s a few hundred at least.

Beyond that, Netflix invests heavily in GraphQL by building its own GraphQL infrastructure. Its engineers work on at least a custom Router and a custom Registry, and have also open-sourced their own Spring-based DGS framework.

The story of PayPal and GraphQL Federation

PayPal’s journey into GraphQL started in 2017, when they faced a growing problem: Their Checkout was powered by a RESTful API, or better, multiple RESTful APIs: Backends-for-Frontends. Core teams built the system in the backend, creating APIs with different protocols, not caring much about how that data would be used. Multiple other teams would then build BFFs for specific clients or products.

A very crucial issue PayPal’s engineers noticed at that time was a lot of repeated low-value boilerplate code in all BFFs. Data had to be fetched, filtered, mapped, and sorted over and over again. This used up valuable time that engineers could have used to do what they were actually meant to do: Building (UI-) features.

Initially, PayPal’s Web Platform team built a monolithic GraphQL API as a proof of concept. With a lot of success and many issues solved, the team soon realized that building an API themselves would not suffice forever. This is when they stumbled upon GraphQL Federation and started to champion the architectural pattern and technology internally.

Soon after, PayPal started migrating over to GraphQL Federation and has added more and more services to its graph over the years. We have no explicit data on how many subgraphs the PayPal graph contains, but we can reasonably assume that it must be hundreds by now.

PayPal’s engineers have since been quoted praising federation and its added benefits, as it increases development speed of new features and frees capacity to work on more important things than dedicated APIs.

As far as we know, PayPal has made GraphQL its default choice for all new UI apps. With over 50 apps and products already connected to the graph, this number will only grow. Additionally, some of its core services (Identity, Payments, and Compliance) have fully migrated to GraphQL, and PayPal even offers a public GraphQL API to interact with its services.

From some of PayPal’s blog posts, we know that PayPal has no dedicated GraphQL infrastructure team. They leverage Apollo’s platform and use the components offered by its product GraphOS.

You have (finally?) come to the end of this issue, so let me tell you something:

Thank you for reading this issue!

Although this issue was incredibly large, I still hope you found it useful.

And now? Enjoy your peace of mind. Take a break. Go on a walk. And if you feel like it, work on a few projects.

Do whatever makes you happy. In the end, that’s everything that counts.

See you next week!

- Oliver